Joseph Zhu

I’m a startup founder working on robotics. For the past 3 years, I have been a cofounder and the only software engineer at Tepan, developing software for a stovetop bimanual robot that autonomously handles everything from ingredient prep, cooking, and seasoning to self-cleaning.

Prior to founding Tepan, I turned down a PhD offer from Berkeley AI Research (BAIR) and briefly joined Stanford. I did some research under the supervision of Jiajun Wu in the Stanford Vision and Learning Lab (directed by Fei-Fei Li), and with Sanmi Koyejo in SNAP.

My tech stack nowadays


- Neural Networks

I work with these at a pretty low level. Back in undergrad, when I was working on audio, there weren’t many standardized architectures, so I usually wrote my own neural networks (e.g. convolutional networks to generate attention masks, transformers implemented in CuPy, convolutions implemented with Fourier transforms, recursive RNNs, and inference for WaveNets). Recently, I have built a custom promptable segmentation model for food ingredients based on ViT-Adapter in Detectron2, and trained 3D networks that use the heat diffusion process to propagate features on meshes.
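Implementing convolutions with Fourier transforms rests on the convolution theorem: a linear convolution equals the inverse FFT of the product of the zero-padded FFTs. A minimal NumPy sketch of that idea (not the original code; the function name `fft_conv1d` is hypothetical), checked against `np.convolve`:

```python
import numpy as np

def fft_conv1d(x: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Full linear convolution of 1-D signals x and h via the FFT."""
    n = len(x) + len(h) - 1              # length of the full linear convolution
    n_fft = 1 << (n - 1).bit_length()    # next power of two >= n, so no circular wrap-around
    X = np.fft.rfft(x, n_fft)            # rfft zero-pads the input to n_fft samples
    H = np.fft.rfft(h, n_fft)
    y = np.fft.irfft(X * H, n_fft)       # pointwise product in frequency = convolution in time
    return y[:n]

rng = np.random.default_rng(0)
x = rng.standard_normal(1000)
h = rng.standard_normal(64)
print(np.allclose(fft_conv1d(x, h), np.convolve(x, h)))
```

For long kernels this costs O(N log N) instead of O(N·K), which is the usual reason to take the FFT route.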

- Information theory/Generative Models

I’m familiar with the theory in general and have gone through the nitty-gritty details of the derivations for diffusion models, flow matching, consistency models, and VAEs by re-deriving everything from scratch. As a side project, I wrote a paper called HiFA that breaks down the score distillation process of a diffusion model into a pseudo-ground-truth generating process. The paper was cited 100+ times within its first year.

I also love reading papers on probability theory in general. My favorite one is GAIL, but I haven’t 100% understood the proof yet.

That said, I am ashamed to admit that “A Mathematical Theory of Communication” has been on my reading list for 5 years now and I still haven’t read it.

- AI Infrastructure

I’m usually forced to figure this out since I’m the only software engineer at Tepan. I’ve managed all the clusters we had on GCP and spent $250k in compute credits within the past year. That includes fine-tuning DINOv2 on around 64 L4 GPUs for 10 days with custom fine-tuning code. Beyond that, I dug deep into FSDP model sharding and worked out nitty-gritty details like “why can’t BatchNorm be FSDP’ed?” and checkpointing shards vs. whole models, in order to train a MaskFormer more efficiently with a bigger batch size. I also wrote a TorchServe wrapper for this custom promptable MaskFormer for efficient serving. I also wrote some TensorRT code back in its early days, but it didn’t turn out too well because back then there was no GPT and very little support for PyTorch ONNX export.

- Hardware Supply Chain and Data Labelling

Supply chain is quite important when running a hardware startup, especially in China. It requires playing tug-of-war with suppliers, getting information and product samples, cross-checking prices, and picking the option that’s both cheap enough and good enough. I’ve built up quite a bit of familiarity with the supply chain for chips and basic brands, and a good sense of the rough price ranges for different products.

I also worked extensively with data labellers, from picking a data labelling agency to writing data manuals and developing ways to monitor the labellers’ efficiency. I curated a dataset of 5M images of food ingredients, with 1M of them filtered and coarsely annotated and 50k of them annotated with segmentation masks, all on a budget of 10k USD.

- 3D modelling and graphics

I am familiar with NeRFs and differentiable rendering pipelines, in particular NVDiffRast and PyTorch3D. I’ve invented custom losses to make NeRFs sharper by regularizing the distribution of density along a ray, and implemented GGX microfacet BRDFs for NeRFs with point lights (although that didn’t work very well because it needs an environment map, and that was computationally infeasible). Other than that, I played quite a lot with Poisson reconstruction, and recently explored using Poisson Recon to obtain a mesh with albedo, roughness, and metallic textures before throwing it into NVDiffRast for further optimization. I have also recently been learning GLFW in an attempt to render a robot along with point clouds into a VR headset at high frame rates for teleoperation.

- Depth Cameras, Camera Calibration, and 3D Reconstruction

I have done a lot of work with depth maps and depth cameras. I’ve worked with structured-light and active stereo cameras straight from suppliers like Orbbec and Deptrum, which often lack functionality that I have to implement myself. Examples include efficiently aligning depth and RGB streams of different resolutions using the relative lens poses (the streams are inherently misaligned because they come through different apertures, one IR and one RGB), and obtaining colored point clouds from them (surprisingly, some suppliers don’t even have that function built into their camera SDKs…). I also wrote a Python binding for the Deptrum SDK because they didn’t have one.
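Aligning a depth stream to an RGB stream amounts to back-projecting each depth pixel with the depth camera’s intrinsics, moving the point into the RGB camera frame with the relative pose, and projecting it with the RGB intrinsics. A minimal NumPy sketch, assuming distortion-free pinhole models, depth in meters, and a 4x4 extrinsic `T_rgb_from_depth`; the function and argument names are hypothetical and not part of any vendor SDK:

```python
import numpy as np

def register_depth_to_rgb(depth, K_d, K_rgb, T_rgb_from_depth, rgb_shape):
    """Reproject a depth image onto the RGB camera's pixel grid (nearest depth wins)."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]
    z = depth.ravel()
    valid = z > 0                                     # 0 marks missing depth
    u, v, z = u.ravel()[valid], v.ravel()[valid], z[valid]
    # Back-project depth pixels to 3-D points in the depth camera frame.
    x = (u - K_d[0, 2]) * z / K_d[0, 0]
    y = (v - K_d[1, 2]) * z / K_d[1, 1]
    pts = np.stack([x, y, z, np.ones_like(z)])        # 4 x N homogeneous points
    # Rigidly move the points into the RGB camera frame.
    p = (T_rgb_from_depth @ pts)[:3]
    p = p[:, p[2] > 0]                                # keep points in front of the RGB camera
    # Project with the RGB intrinsics (the RGB image may have a different resolution).
    u2 = np.round(K_rgb[0, 0] * p[0] / p[2] + K_rgb[0, 2]).astype(int)
    v2 = np.round(K_rgb[1, 1] * p[1] / p[2] + K_rgb[1, 2]).astype(int)
    H, W = rgb_shape
    ok = (u2 >= 0) & (u2 < W) & (v2 >= 0) & (v2 < H)
    out = np.full((H, W), np.inf)
    # Z-buffer: when several points land on one RGB pixel, keep the closest.
    np.minimum.at(out, (v2[ok], u2[ok]), p[2][ok])
    out[np.isinf(out)] = 0.0
    return out
```

Once every depth value sits on the RGB grid, a colored point cloud follows by back-projecting the registered depth with `K_rgb` and reading the color at the same pixel.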

I also have a deep understanding of camera parameters and the calibration process. I had two depth cameras whose intrinsics I knew, but I needed their exact relative pose in order to merge their point clouds. I bought an AprilGrid calibration target from Taobao and realized that only Kalibr could give me the corners accurately, but Kalibr doesn’t let me calibrate the extrinsics directly given the intrinsics, so I had to hand-write a Direct Linear Transform (DLT) plus some ICP and some RANSAC plane detection to get the job done. I’m also familiar with hand-eye calibration methods such as Tsai-Lenz.
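A core building block of the ICP step mentioned above is the closed-form rigid alignment of matched 3-D points (the SVD-based Kabsch solution), e.g. for target corners seen by both cameras. A minimal NumPy sketch, assuming correspondences are already known; this is the textbook method, not necessarily the exact pipeline described, and `rigid_align` is a hypothetical name:

```python
import numpy as np

def rigid_align(P: np.ndarray, Q: np.ndarray):
    """Find R, t minimizing sum ||R @ P_i + t - Q_i||^2 for matched N x 3 arrays P, Q."""
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)          # 3 x 3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    # Flip the last singular direction if needed so R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t

rng = np.random.default_rng(1)
P = rng.standard_normal((20, 3))               # e.g. corners in camera 1's frame
A, _ = np.linalg.qr(rng.standard_normal((3, 3)))
R_true = A * np.sign(np.linalg.det(A))         # force a proper rotation
t_true = np.array([0.1, -0.2, 0.3])
Q = P @ R_true.T + t_true                      # the same corners in camera 2's frame
R, t = rigid_align(P, Q)
print(np.allclose(R, R_true), np.allclose(t, t_true))
```

Full ICP alternates this solve with re-matching nearest neighbors; RANSAC over candidate matches makes it robust to outliers.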

- Embedded Robotics Software

The entire Tepan robot was built from scratch. The most pre-packaged component we had was a custom servo for some joints, and we had to take apart even that. As a result, I wrote PID controllers for quite a few different types of motors on STM and Arduino boards (back when I was building in my garage). There’s a lot of low-level engineering involved; here are a few examples. Back in 2022, I modified the Arduino AccelStepper library to compute stepper pulse intervals in real time from high-frequency waypoint inputs on a mere Arduino Nano (where I couldn’t use square roots or divisions without risking a missed pulse), by extending this paper.

Recently (this is probably the biggest engineering NIGHTMARE I’ve ever faced!), we had a custom board that tried to run FOC on a stepper motor. We struggled with it for 3 months, and the major problems were: (i) The UART communication would randomly break down; in the end, it was an issue with a cyclic buffer. (ii) There is a quantity called the “zero electric angle” in FOC, and it kept changing even though it was supposed to be consistent; it turned out that the position sensor was reporting incremental angles relative to power-on rather than absolute angles. (iii) The motor would randomly get stuck under a little bit of resistance. This one took the longest. Initially we thought PWM issues or some back-EMF effect were keeping the motor from producing enough force, but after countless tests it turned out that the position sensor magnet wasn’t big enough, causing small errors (around 0.5 degrees) in position readings, and stepper motor FOC happens to be REALLY sensitive to those errors.
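The sensitivity in (iii) becomes clearer once mechanical angle is converted to electrical angle: FOC commutates on the electrical angle, which is the mechanical angle times the number of pole pairs, minus the calibrated zero electric angle. Assuming a standard 1.8°-per-step hybrid stepper, which has 50 rotor teeth and so behaves like a 50-pole-pair motor (one electrical cycle per 7.2° of rotation), a 0.5° mechanical error turns into a 25° electrical error. A small sketch of that arithmetic; the function names and the 7-pole-pair BLDC comparison are illustrative assumptions:

```python
STEPPER_POLE_PAIRS = 50   # 1.8 deg/step hybrid stepper: 200 full steps / 4 steps per electrical cycle
BLDC_POLE_PAIRS = 7       # illustrative small BLDC motor, for comparison

def electrical_angle_deg(mech_deg: float, zero_elec_deg: float, pole_pairs: int) -> float:
    """Electrical angle used for commutation, wrapped to [0, 360)."""
    return (pole_pairs * mech_deg - zero_elec_deg) % 360.0

def electrical_error_deg(mech_error_deg: float, pole_pairs: int) -> float:
    """A mechanical sensor error is multiplied by the pole-pair count."""
    return pole_pairs * mech_error_deg

for name, pp in [("stepper", STEPPER_POLE_PAIRS), ("BLDC", BLDC_POLE_PAIRS)]:
    print(f"{name}: 0.5 deg mechanical -> {electrical_error_deg(0.5, pp):.1f} deg electrical")
# stepper: 0.5 deg mechanical -> 25.0 deg electrical
# BLDC: 0.5 deg mechanical -> 3.5 deg electrical
```

The same multiplication explains why the zero electric angle in (ii) only stays valid if the sensor’s zero is tied to a fixed rotor position.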

- Robotics Fundamentals

Tepan has a really weirdly shaped robot, with two 5-DOF arms, one branching off the other. I wrote custom forward kinematics with Jacobian computation using SymPy, and a custom null-space inverse kinematics solver (a teleop requirement forced me to parameterize the robot with Euler angles, so the angular component of the Jacobian was in Euler-angle rates instead of the usual angular velocity, and I had to do some tricky derivations to avoid gimbal lock). I also implemented gravity compensation from scratch.
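Null-space IK of the kind mentioned above is commonly written as dq = J⁺dx + (I - J⁺J)dq₀: the first term achieves the task motion, and the second moves the joints only in directions the task cannot see. A minimal NumPy sketch using the plain Moore-Penrose pseudoinverse; the 3-row task Jacobian and the secondary motion `dq0` are illustrative assumptions, not Tepan’s solver (which also had to handle Euler-angle rates in the angular rows):

```python
import numpy as np

def nullspace_ik_step(J: np.ndarray, dx: np.ndarray, dq_secondary: np.ndarray) -> np.ndarray:
    """One resolved-rate IK step with a secondary objective projected into the null space of J."""
    J_pinv = np.linalg.pinv(J)                    # Moore-Penrose pseudoinverse
    N = np.eye(J.shape[1]) - J_pinv @ J           # projector onto the null space of J
    return J_pinv @ dx + N @ dq_secondary

rng = np.random.default_rng(0)
J = rng.standard_normal((3, 5))                   # e.g. a 3-D position task on a 5-joint arm
dx = np.array([0.01, -0.02, 0.005])               # desired task-space step
dq0 = rng.standard_normal(5)                      # e.g. a step toward a comfortable posture
dq = nullspace_ik_step(J, dx, dq0)
print(np.allclose(J @ dq, dx))                    # the secondary term leaves the task motion unchanged
```

Near singularities, a damped pseudoinverse J^T(JJ^T + λ²I)^-1 is a common substitute, at the cost of slightly less exact tracking.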

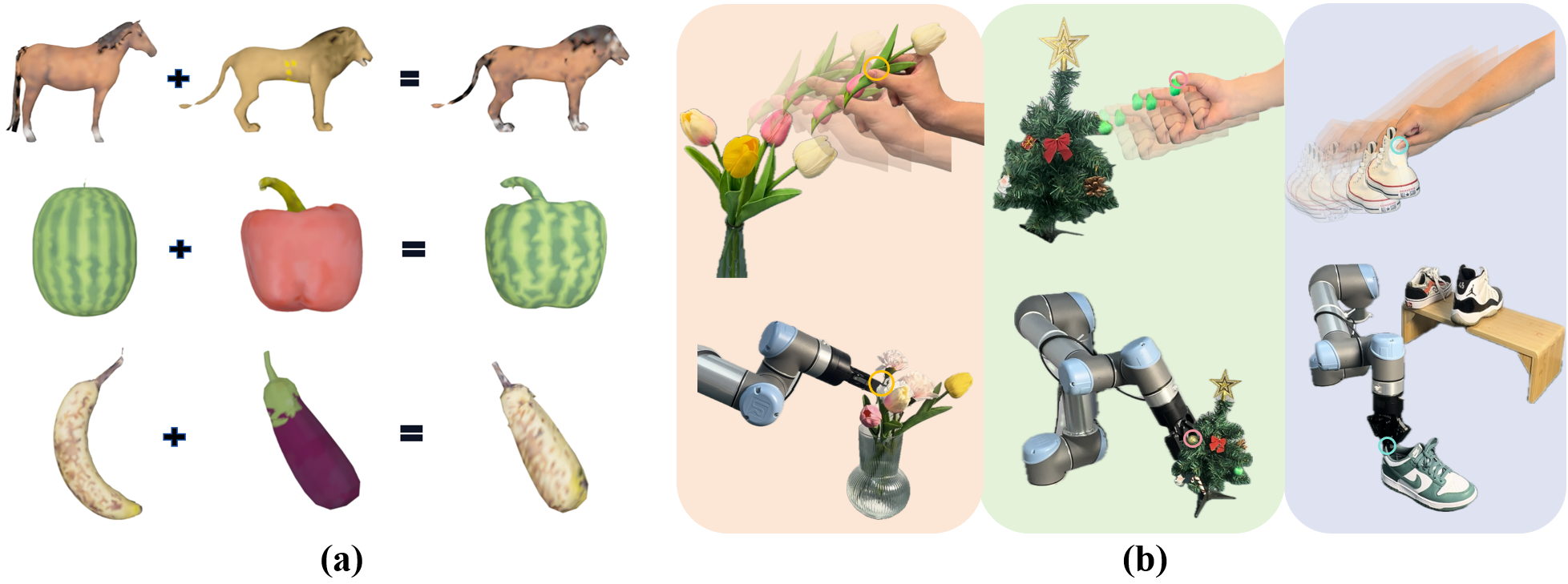

I have designed a lot of adaptive motions for the robot. For example, there would be a predefined chopping motion for round things that need to be sliced, adapted to food items with different positions, sizes, and distortions. To this end, I also did a project called DenseMatcher, which finds correspondences between objects in order to map one object’s contact points onto another.

- VR/Spatial Computing

I have recently been writing a program that uses two Oculus VR controllers to program a robot. The key challenge is mapping the controller poses to robot poses, which are kinematically constrained, and figuring out the most intuitive way to couple the two. For example, if the user pauses the robot for a bit, they wouldn’t want the robot to suddenly snap when it resumes just because the controller moved in between. Another example: should I model the controller as rotating around an origin centered at itself, or around an origin centered at some external coordinate frame, and what about the robot? If users want the robot to rotate at half the speed of the controller, how should I model this division of a rotation? There are a lot of fun ways to manipulate SE(3) matrices under different coordinate frames, plus some tricky transforms between coordinate conventions (Bullet vs. OpenGL, COM frame vs. URDF link frame, etc.).
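One possible answer to the “no snap on resume” and “half speed” questions is to record both poses at the moment motion resumes, take the controller’s rotation since then, scale it in axis-angle (log-map) form, and apply it to the robot’s orientation recorded at resume. A minimal NumPy sketch, assuming 3x3 rotation matrices expressed in a shared world frame and rotation angles below π; the function names are hypothetical and this is only one of several reasonable conventions:

```python
import numpy as np

def so3_log(R: np.ndarray) -> np.ndarray:
    """Rotation matrix -> rotation vector (axis * angle); assumes angle < pi."""
    theta = np.arccos(np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0))
    if theta < 1e-9:
        return np.zeros(3)
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta / (2.0 * np.sin(theta)) * w

def so3_exp(v: np.ndarray) -> np.ndarray:
    """Rotation vector -> rotation matrix (Rodrigues' formula)."""
    theta = np.linalg.norm(v)
    if theta < 1e-9:
        return np.eye(3)
    k = v / theta
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K

def scaled_robot_rotation(R_ctrl_now, R_ctrl_at_resume, R_robot_at_resume, scale=0.5):
    """Apply `scale` times the controller's rotation since resume to the robot's orientation."""
    delta = R_ctrl_now @ R_ctrl_at_resume.T       # controller rotation since resume, in the world frame
    return so3_exp(scale * so3_log(delta)) @ R_robot_at_resume   # world-frame delta goes on the left

Rz90 = so3_exp(np.array([0.0, 0.0, np.pi / 2]))
R_robot = scaled_robot_rotation(Rz90, np.eye(3), np.eye(3), scale=0.5)
print(np.allclose(R_robot, so3_exp(np.array([0.0, 0.0, np.pi / 4]))))   # 90 deg in -> 45 deg out
```

Because `delta` is the identity at the instant of resuming, the robot starts from exactly where it stopped. Applying `delta` on the right instead would rotate about the robot’s own body axes, which is the other modeling choice raised above.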

- Signal Processing

I worked on computational speech for quite some time and have an in-depth understanding of signal processing math, including convolutions, Short-Time Fourier Transforms, wavelets, and time-varying IIR filters, as well as image signal processing. Aside from published research, I worked on an AI audio codec at Tencent, where I turned Linear Predictive Coding (a type of residual coding for compressing speech) into a differentiable layer in the neural network. Recently I have been working on functional maps, which are basically signal processing for meshes. It turns out that you can apply something similar to the Fourier transform to meshes, called Laplace-Beltrami eigenfunctions.
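The Fourier analogy can be checked on the simplest possible “mesh”, a closed loop of n vertices: the eigenvectors of its graph Laplacian are discrete sines and cosines, with eigenvalues 2 - 2cos(2πk/n). On real meshes a cotangent-weighted Laplace-Beltrami operator plays this role; the uniform weights below are a simplifying assumption:

```python
import numpy as np

n = 16
L = 2.0 * np.eye(n)
for i in range(n):
    L[i, (i + 1) % n] = L[i, (i - 1) % n] = -1.0    # each vertex linked to its two neighbors

evals, evecs = np.linalg.eigh(L)                    # symmetric matrix: real, ascending eigenvalues
k = np.arange(n)
expected = np.sort(2.0 - 2.0 * np.cos(2.0 * np.pi * k / n))
print(np.allclose(evals, expected))

# A cosine of frequency 3 is an eigenvector: the Laplacian only rescales it.
f = np.cos(2.0 * np.pi * 3 * k / n)
print(np.allclose(L @ f, (2.0 - 2.0 * np.cos(2.0 * np.pi * 3 / n)) * f))

# Projecting a signal onto the eigenvectors is the mesh analogue of a Fourier transform.
signal = np.sin(2.0 * np.pi * k / n) + 0.1 * np.cos(2.0 * np.pi * 5 * k / n)
coeffs = evecs.T @ signal
print(np.allclose(evecs @ coeffs, signal))          # orthonormal basis: perfect reconstruction
```

Small eigenvalues correspond to low frequencies, so truncating `coeffs` acts as a low-pass filter, which is the property functional maps build on.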

- Physics of Electricity & Mechanics

I did my undergrad in EE, so I understand how analog and digital circuits work on a fundamental level, and how radio/electromagnetic waves work. (Quick question: what is the difference between radio waves and lasers in terms of the electromagnetic field?) Thanks to the coaching of Prof. Jont Allen, I got to learn about dynamical systems and how neurons work at the electrical level. I also dabbled in fluid mechanics (Navier-Stokes for liquids, the Webster horn equation for gas and sound) and structural mechanics, although I’m not an expert.

Publications

I spend most of my time doing engineering, but occasionally publish research papers.

DenseMatcher: Learning 3D Semantic Correspondence for Category-Level Manipulation from One Demo. In The Thirteenth International Conference on Learning Representations (ICLR), 2025. Spotlight Award.

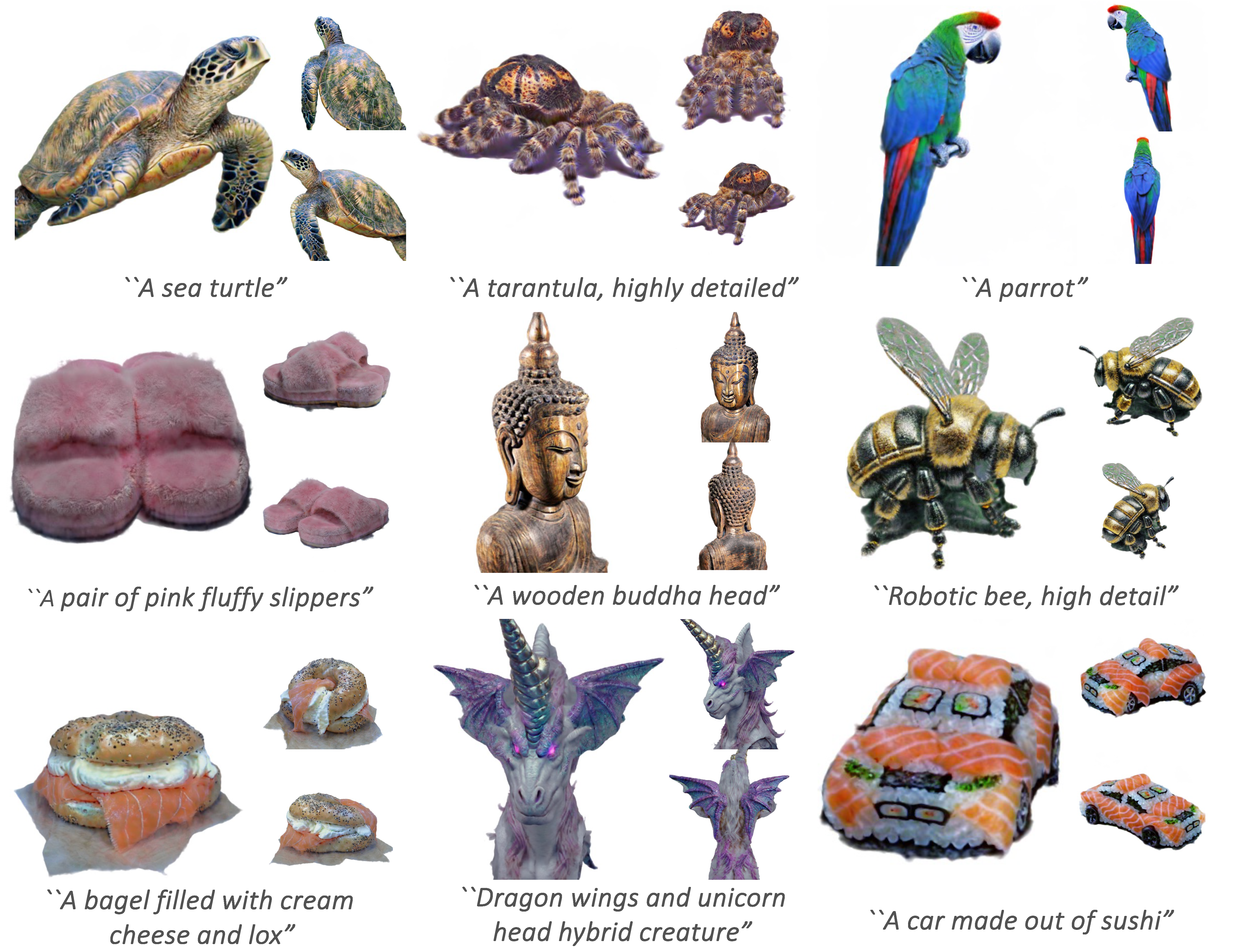

HIFA: High-fidelity Text-to-3D Generation with Advanced Diffusion Guidance. In The Twelfth International Conference on Learning Representations (ICLR), 2024.

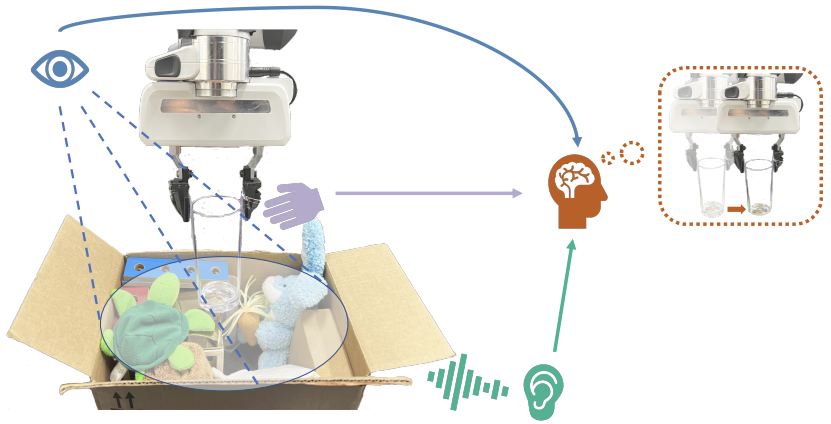

See, Hear, and Feel: Smart Sensory Fusion for Robotic Manipulation. In The Conference on Robot Learning (CoRL), 2022.

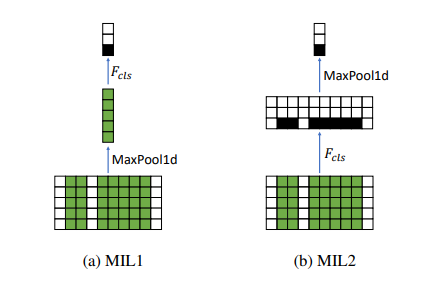

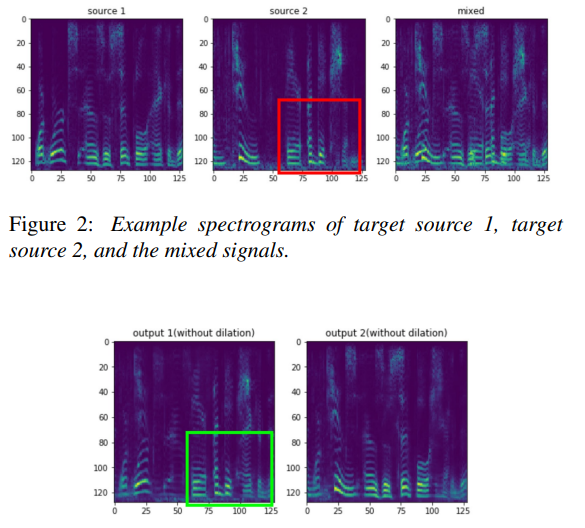

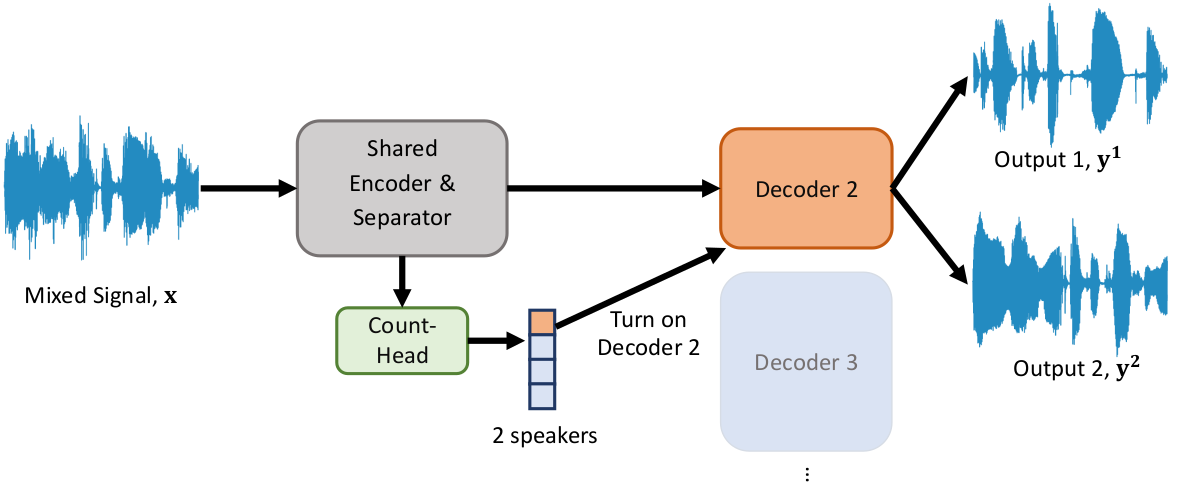

Multi-Decoder DPRNN: Source Separation for Variable Number of Speakers. In ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2021.

Places I worked at

Facebook

I was working on Reality Labs’ AI team. My job was to write a distillation framework for activity detection models running on AR glasses. The basic idea is that inside Facebook, we have the data and compute to train huge self-supervised “foundation models” (like VLP transformers) using video-language-audio contrastive training and such, but those models are too big to be deployed on the AR glasses. On the other hand, the “on-glass” models are small video processing neural networks (like MViT and X3D), and those have lower accuracy when trained normally with supervised learning. My job was to write a framework that extracts features and soft probability predictions from the big models and trains the smaller models on them. This was a huge performance booster: I was able to boost the mAP of a 100+ category video classification model from 0.32 to 0.49.
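The soft-probability half of such a framework is commonly implemented as the Hinton-style distillation loss: the KL divergence between temperature-softened teacher and student distributions, scaled by T², mixed with the ordinary supervised loss. A minimal NumPy sketch for a single-label softmax classifier (a multi-label model would use per-class sigmoids instead); the temperature `T`, weight `alpha`, and function names are illustrative assumptions, and the actual framework, which also distilled features, may differ:

```python
import numpy as np

def log_softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)          # subtract the max for numerical stability
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * cross-entropy on hard labels."""
    log_p_t = log_softmax(teacher_logits / T)        # softened teacher distribution
    log_p_s = log_softmax(student_logits / T)        # softened student distribution
    kl = (np.exp(log_p_t) * (log_p_t - log_p_s)).sum(axis=-1).mean()
    ce = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return alpha * T**2 * kl + (1 - alpha) * ce

rng = np.random.default_rng(0)
teacher = rng.standard_normal((8, 10))               # batch of 8, 10 classes
student = rng.standard_normal((8, 10))
labels = rng.integers(0, 10, size=8)
print(distillation_loss(student, teacher, labels))
```

The T² factor keeps the gradient magnitude of the soft term roughly constant as the temperature changes, so `alpha` stays meaningful across temperatures.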

Tencent

Encoding human speech at a 2 kb/s bitrate to let you have meetings in an underground parking structure or elevator. I mingled a bunch of GAN (Generative Adversarial Network) techniques with signal compression methods developed at Bell Labs in the ’80s. I forgot to save a recording when I left the job; this is something I managed to produce halfway through the internship.

MIT

In 2019, when I was a sophomore, I was really intrigued by neural networks (not the ones you train; I’m talking about the ones in the human brain) and the PDEs (partial differential equations) that govern them, so I read this book. I wanted to do research on it, so I planned to go to MIT’s McGovern Institute to do some research in summer 2020. However, Covid hit and MIT completely stopped hiring staff, so I couldn’t go there in person. I wound up doing some part-time remote research. This was the code.

SenseTime

Capital One

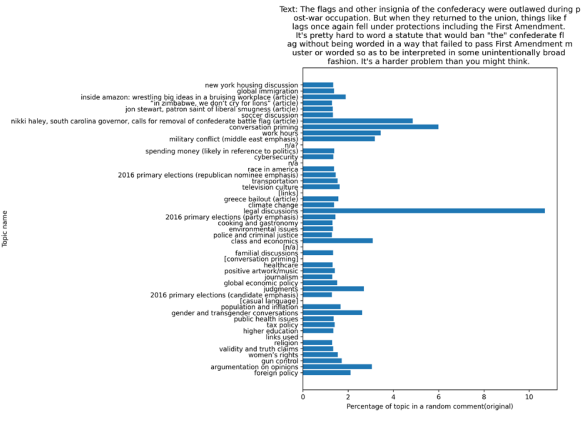

Did some NLP work, like sentiment analysis, in the anti-money-laundering department.

i-jet Lab

I was learning things like object detection, semantic segmentation, and path planning, and I got the chance to work on a lot of cool projects. For one, here I am teaching a boat how to dock itself:

I also made an imitation learning proof-of-concept with an omnidirectional robot for docking.

I took care of a variety of other projects, like doing NLP on 600k patents, writing the trajectory for a drone to take videos, and classifying satellite images to analyze our users.

Previous Life

I got my Bachelor’s Degree in Electrical Engineering at the University of Illinois at Urbana-Champaign, where I was advised by Prof. Mark Hasegawa-Johnson and Prof. Jont Allen, specializing in speech signal processing and machine learning. I did a college speedrun, graduating in under 2.5 years, with the last stretch mostly devoted to research.

How I started doing engineering

In high school, I specialized in literature (like Shakespearean early-modern English or early-1800s American poetry). At the beginning of college, I saw that GPT-2 could write essays, so I started learning Python and trained an LSTM on Shakespearean poetry. Later on, I also played with computer vision and audio. I did an internship at Brunswick Corporation working on self-driving boats using imitation learning, and then did some research and published 4 papers on computational audio and language.

My premeditated dropout from Stanford

When I finished undergrad, I was accepted to the EECS PhD program at Berkeley AI Research with a full scholarship and some additional stipends, as well as to the CS program at Stanford. I knew I wasn’t gonna do research for another 5 years and would probably drop out soon to do a startup, so I enrolled in the latter and dropped out around a year later.